Global Keynote Speaker on AI

I am a global keynote speaker, author, and adviser on artificial intelligence, specialising in the future of AI and its impacts on all of us – our societies and our economies. I cover digital disruption, the future of jobs, the future of health, the impact on various economic sectors, and much more.

I have given talks on AI in 18 countries on five continents, and I am available for speaking engagements worldwide and virtually.

I have written four books on AI – two novels and two works of non-fiction – and numerous articles, many of which you can find on this website.

Last but not least, I co-host the London Futurist Podcast, a weekly series of 30-minute discussions about AI and related subjects like the use of data, longevity research, and the simulation hypothesis. The episodes feature informed, articulate guests talking about a future which is exciting, but not immune to risk and disappointment.

Thought-provoking talks on AI

“For our 2021 P&G Personal Development week we invited Calum to give a talk on why digital is an important aspect during these days. It was great! The senior people all stayed to the end. That almost never happens! He is a truly exceptional guest speaker. He has such charisma and at the same time humility. Calum’s focus on the power of Digitalization has never been more relevant than now. He provided such great insight and examples on where the future may bring, how and why we need to adjust to the technological wave that is coming. We were talking about him hours after his presentation and what a superb speaker he was – I couldn’t recommend him enough.” P&G

“Yesterday I had the awesome opportunity to see Calum Chace speak about the societal impact of Artificial Intelligence in the future. Hands down, the most thought provoking presentation I’ve ever seen. It is a life changing perspective of things that you don’t have to be a technology geek to appreciate.” Network Alliance, Maryland, USA

Books

SURVIVING AI

Artificial intelligence is our most powerful technology, and in the coming decades it will change everything in our lives …

THE ECONOMIC SINGULARITY

“Read The Economic Singularity if you want to think intelligently about the future.”

Aubrey de Grey

PANDORA'S ORACLE

The mind of a student named Matt has been uploaded into a supercomputer, but before he can achieve a fraction …

PANDORA'S BRAIN

Around half the scientists researching artificial intelligence (AI) think that a conscious AI at or beyond human level will be created by 2050 …

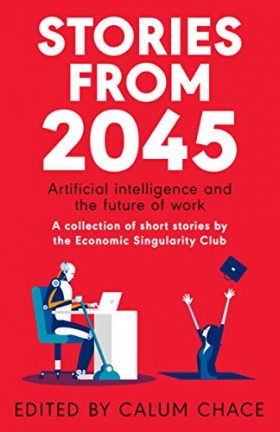

Collection of short stories

In 2017 I co-founded a think tank focused on the future of jobs, the Economic Singularity Foundation.

The Foundation has published Stories from 2045, a collection of short stories written by its members.